Transparent Two-level Classification Method for Images

We present a new method that makes the classification of images more transparent.

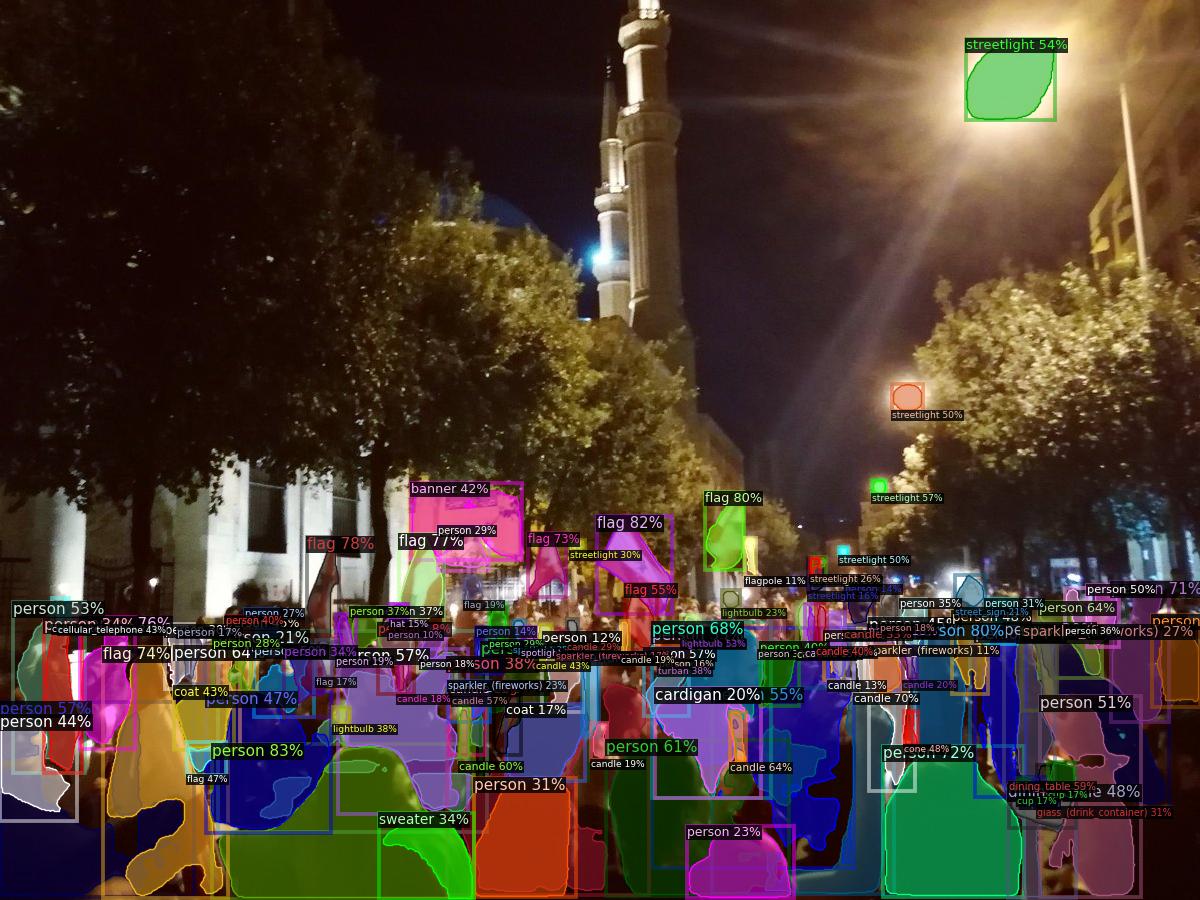

While existing image classifiers reach high levels of accuracy, it is difficult to systematically assess which visual features they base their classifications on. This problem is especially pressing for complex images that contain many different types of objects. Our method detects the objects present in an image, creates a feature vector from those objects, and uses these vectors as input for machine learning classifiers. We tested it on a new dataset of 140,000 images, predicting which of them show protest; its accuracy is roughly on par with popular convolutional neural networks (CNNs). The novelty of the method is that it provides new insights for comparative politics: while persons, flags, and signboards are important objects in protest images, the particular features of protest differ across countries and protest episodes, and our method can detect these differences.
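The two levels described above can be illustrated with a minimal sketch. It rests on several assumptions that are not part of the original method description. The object detector (level 1) is replaced by hard-coded label lists. `VOCABULARY` is a hypothetical vocabulary, and "count each object" is only one possible aggregation. A hand-written logistic regression stands in for level 2; the article's actual classifiers may differ.

```python
import math
from collections import Counter

# Hypothetical object vocabulary (assumption for illustration only).
VOCABULARY = ["person", "flag", "signboard", "car"]


def feature_vector(detected_labels, vocabulary=VOCABULARY):
    """Level 1: count how often each vocabulary object was detected in one image."""
    counts = Counter(detected_labels)
    return [float(counts[obj]) for obj in vocabulary]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Level 2: fit a logistic regression with batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Probability that the image with feature vector x shows protest."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Toy data: object labels a (hypothetical) upstream detector returned per image.
images = [
    ["person", "person", "flag", "signboard"],
    ["person", "person", "person", "signboard", "signboard"],
    ["person", "car", "car"],
    ["car"],
]
labels = [1, 1, 0, 0]  # 1 = protest, 0 = no protest

X = [feature_vector(img) for img in images]
w, b = train_logistic_regression(X, labels)
p_protest = predict_proba(w, b, feature_vector(["person"] * 3 + ["flag"] + ["signboard"] * 2))
p_traffic = predict_proba(w, b, feature_vector(["car", "car"]))
```

Because every input dimension corresponds to a named object, the learned weights in `w` can be read directly. In this toy example, the weights for "flag" and "signboard" push towards protest and the weight for "car" pushes away from it. This per-object readout is what makes the two-level design more transparent than an end-to-end CNN.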

Research Article

Further information on the method can be found in the following research article.

Replication Materials

The replication code, model weights, and data for this article have been published on GitHub and the Harvard Dataverse.

Demo

If you just want to try out our method on a few of your own images, we recommend the demo. It lets you upload an image and define a vocabulary of objects and an aggregation method. It then returns the segmented image together with the corresponding feature vector. The demo can be used either through an interactive demonstration application with reduced functionality or through an application programming interface (API) using Hugging Face.
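To illustrate what "vocabulary" and "aggregation" mean here, the following sketch turns a segmentation result into a feature vector. It is not the demo's code or API. The mask format (a 2-D list of integer class ids), the `class_names` mapping, and the aggregation names `"share"` and `"presence"` are hypothetical choices made for this example.

```python
from collections import Counter


def aggregate_mask(mask, class_names, vocabulary, aggregation="share"):
    """Turn a per-pixel class-id mask into a feature vector over `vocabulary`.

    mask:        2-D list of integer class ids (assumed segmentation output).
    class_names: dict mapping class id -> object name (hypothetical).
    aggregation: "share"    -> fraction of all pixels covered by each object,
                 "presence" -> 1.0 if the object appears at all, else 0.0.
    """
    pixel_counts = Counter(class_names[c] for row in mask for c in row)
    total = sum(len(row) for row in mask)
    if aggregation == "share":
        return [pixel_counts[obj] / total for obj in vocabulary]
    if aggregation == "presence":
        return [1.0 if pixel_counts[obj] > 0 else 0.0 for obj in vocabulary]
    raise ValueError(f"unknown aggregation: {aggregation!r}")


# Tiny 2x4 "segmented image": 0 = background, 1 = person, 2 = flag.
mask = [[0, 0, 1, 1],
        [0, 2, 2, 1]]
class_names = {0: "background", 1: "person", 2: "flag"}
vocabulary = ["person", "flag", "signboard"]

share = aggregate_mask(mask, class_names, vocabulary, "share")
presence = aggregate_mask(mask, class_names, vocabulary, "presence")
```

Objects in the vocabulary that do not occur in the image (here "signboard") simply get a 0 entry. As a result, every image yields a vector of the same length, which is what a downstream classifier needs.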